Summary of Converging Realms: Law, Technology, and Society in the Age of Ethical and Multi-Agent AI Conference

Involving diverse stakeholders representing various perspectives is crucial in discussions surrounding the application and creation of AI solutions. Only this diversity will ensure a more holistic understanding of AI’s potential impacts and challenges, as different stakeholders bring unique insights and priorities to the table.

The Converging Realms: Law, Technology, and Society in the Age of Ethical and Multi-Agent AI conference, organised by the Future Law Lab in cooperation with the Centrum Cyfrowe Foundation at the Jagiellonian University in Kraków on 26-27 September 2024, had two ambitions: to bring together different approaches towards AI, especially in mission-driven sectors (culture, education, research and justice), and to foster an AI-focused exchange and dialogue between policy makers, the private sector, academia and wider civil society.

The main takeaway? Only by engaging voices from multiple professional backgrounds can AI solutions even begin to be designed to be inclusive, equitable, and aligned with the broader societal good, helping to mitigate risks like bias or misuse of data. Interdisciplinary dialogue also provides the basis for building inclusive research teams with the potential to have an international impact on the development of science.

Ultimately, this multi-stakeholder approach is a necessary ingredient for fostering more robust, sustainable, ethical, and responsible AI innovations and research in the future. This is extremely important when thinking about AI developments and deployments (both general-purpose and generative AI) in the so-called mission-driven sectors: culture, academia and education.

We might be going through an AI hype, but at the same time AI is here to stay. As Luciano Floridi states in his Ethics of Artificial Intelligence: “Discussing whether the glass is half empty or half full is pointless. The interesting question is how we can fill it.”

A clash of interests between the various stakeholders involved in AI discussions clearly exists, and it was reflected in the exchange. The most frequently asked question was: how can we support the development of innovative technologies while also protecting the ownership, privacy and human rights of creators and society? Is it possible at all?

For this ambition to have even the slightest chance of resonating, it seems that a long-term, balanced and multi-faceted approach is required. Key strategies should include:

- Ethical Frameworks: Exchange and implement ethical guidelines and frameworks that prioritise human rights, such as fairness, privacy, and non-discrimination. These frameworks should be designed to guide innovators toward solutions that respect human dignity and equity. Interestingly, AI may also change the way we revisit ethical questions per se.

- Regulatory Oversight: Governments and regulatory bodies should establish and enforce regulations that promote responsible innovation, taking into account the interests not only of the big actors, like big-tech companies or creators, but also of the usually silent voices: researchers, educators, and society at large. These regulations should be flexible enough to allow innovation and research to thrive but stringent enough to prevent harm, ensuring that new technologies are aligned with public interests.

- Inclusive Stakeholder Engagement: Engage diverse stakeholders (including the public sector, tech developers, regulators, academics and civil society) early in the innovation process and policy discussions to avoid working in silos. This ensures that innovations account for the needs and concerns of all individuals, not just powerful or well-represented groups.

- Transparency and Accountability: Encourage transparency in the development of new technologies, ensuring that the public understands how innovations are designed, implemented, and governed. Implementing accountability measures, such as independent audits and impact assessments, ensures that companies and innovators can be held accountable.

- Skills and Competences: Alongside regulation, we need to support the development of a critical mindset and of the competences that the rise of AI demands, which are crucial for individuals and organisations to thrive in an AI-driven world. These competences blend technical, ethical, and strategic skills, as AI impacts multiple sectors. AI and data literacy is essential to understanding the basics of AI: its capabilities, limitations, and applications. It also covers how to maintain a healthy information diet: what to look for, what to avoid, and how to be selective.

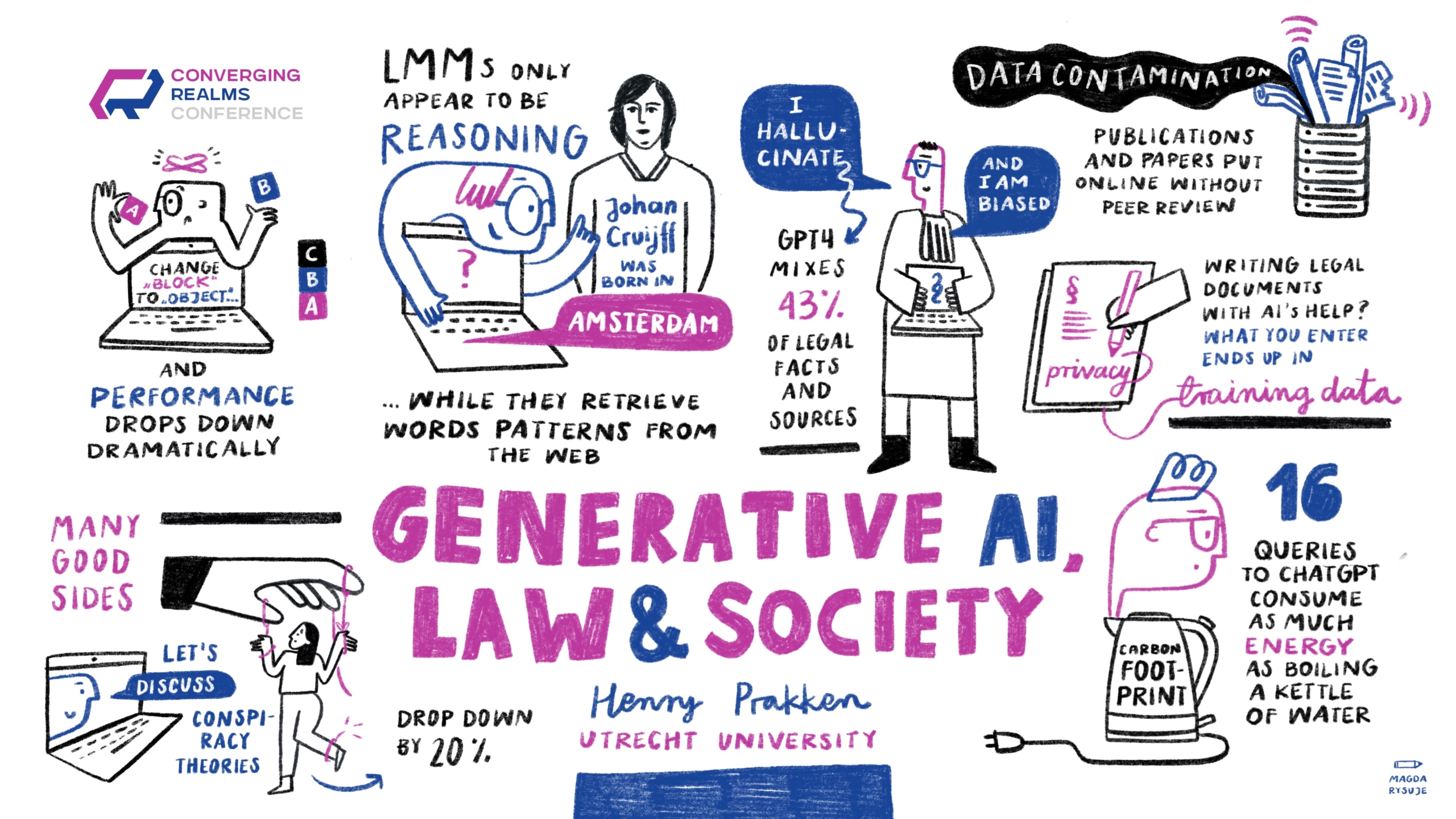

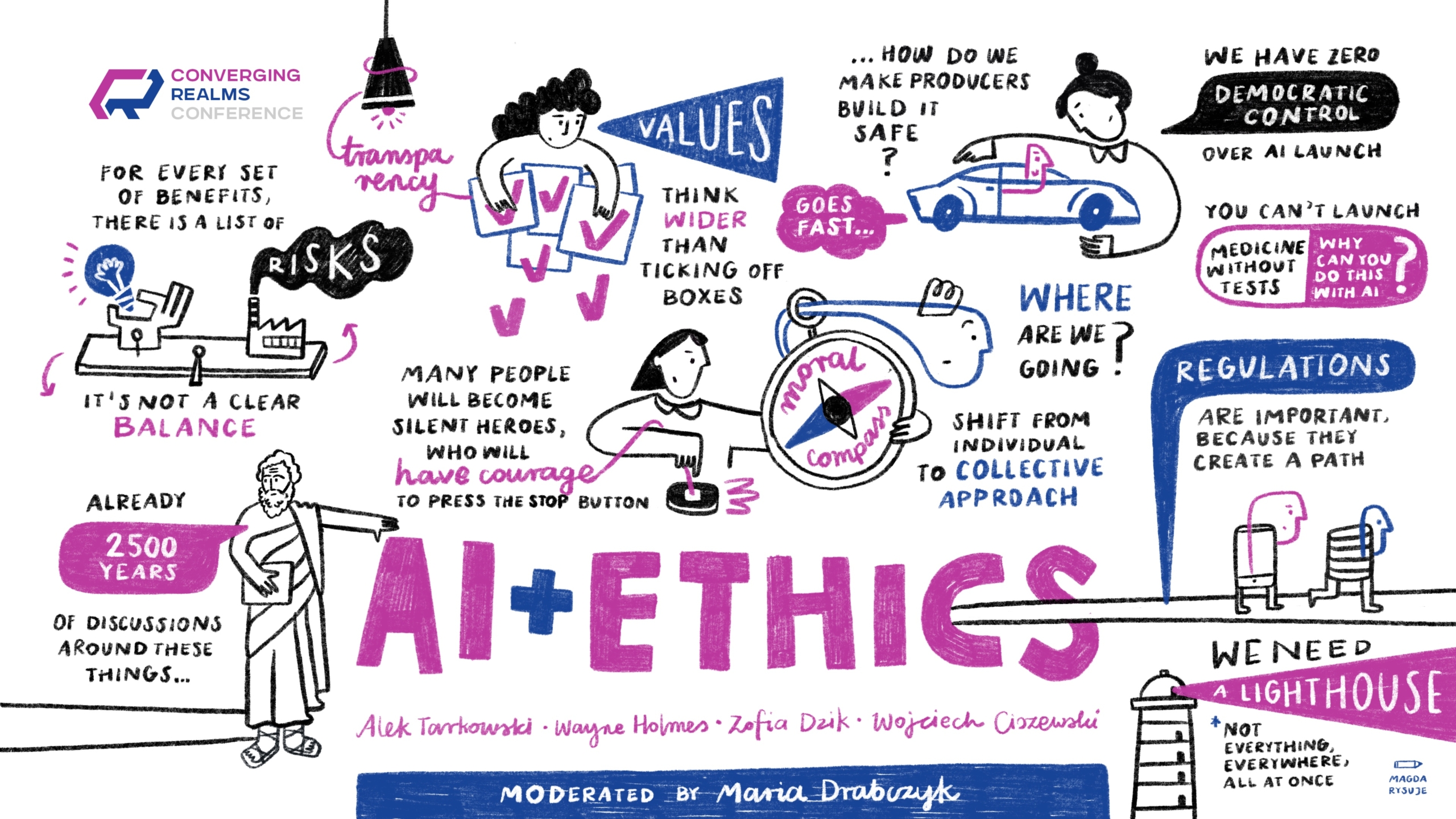

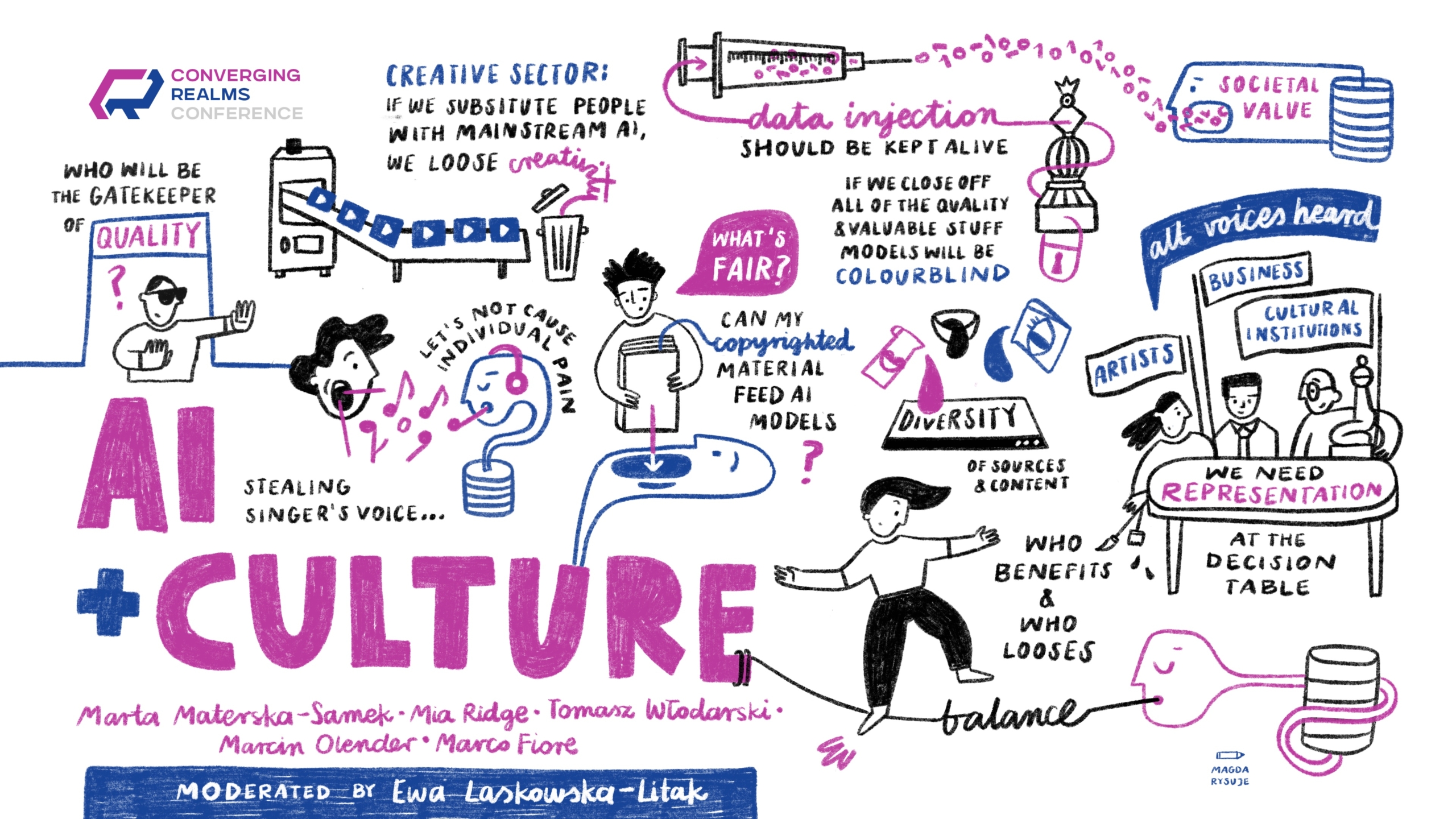

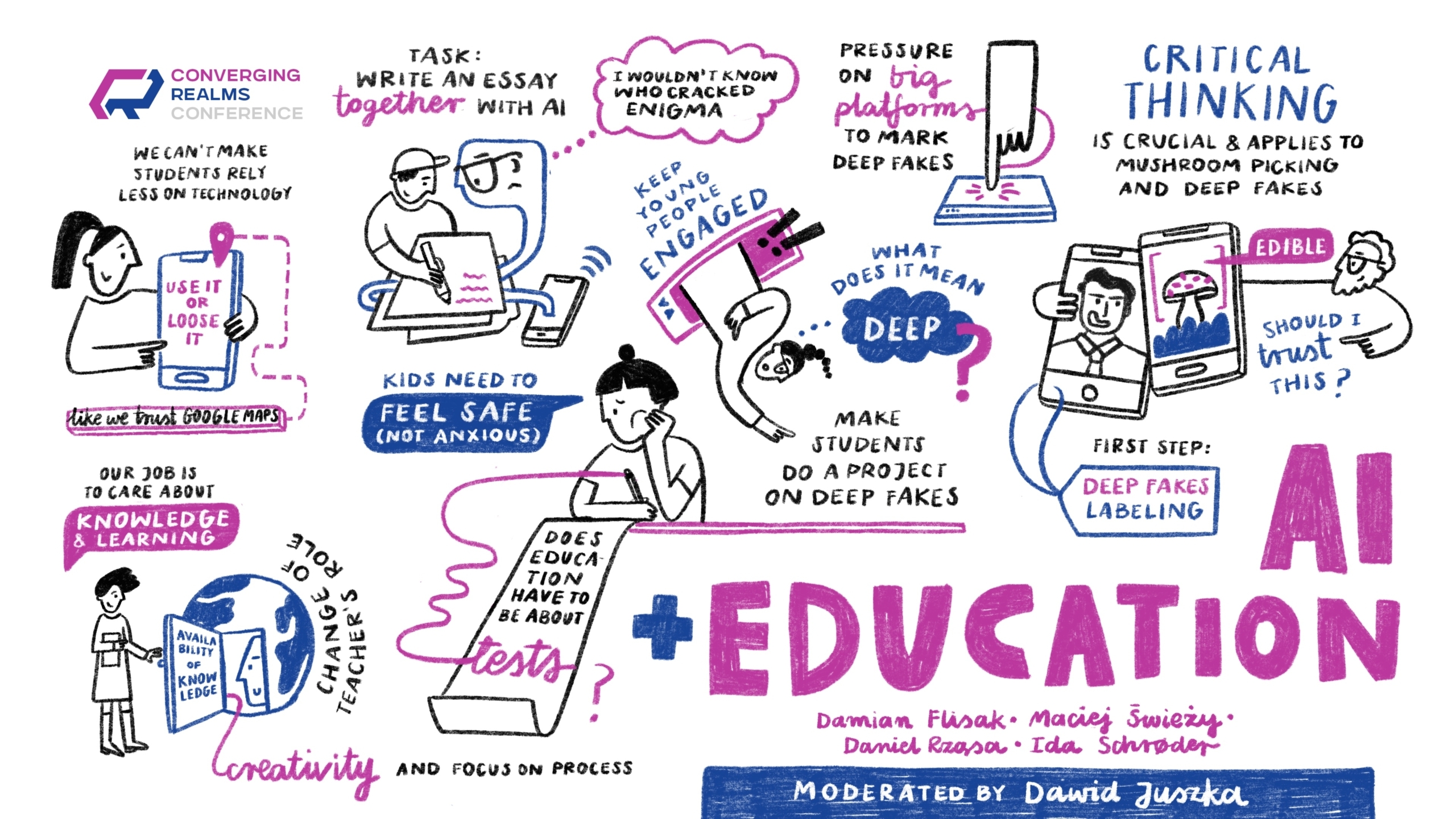

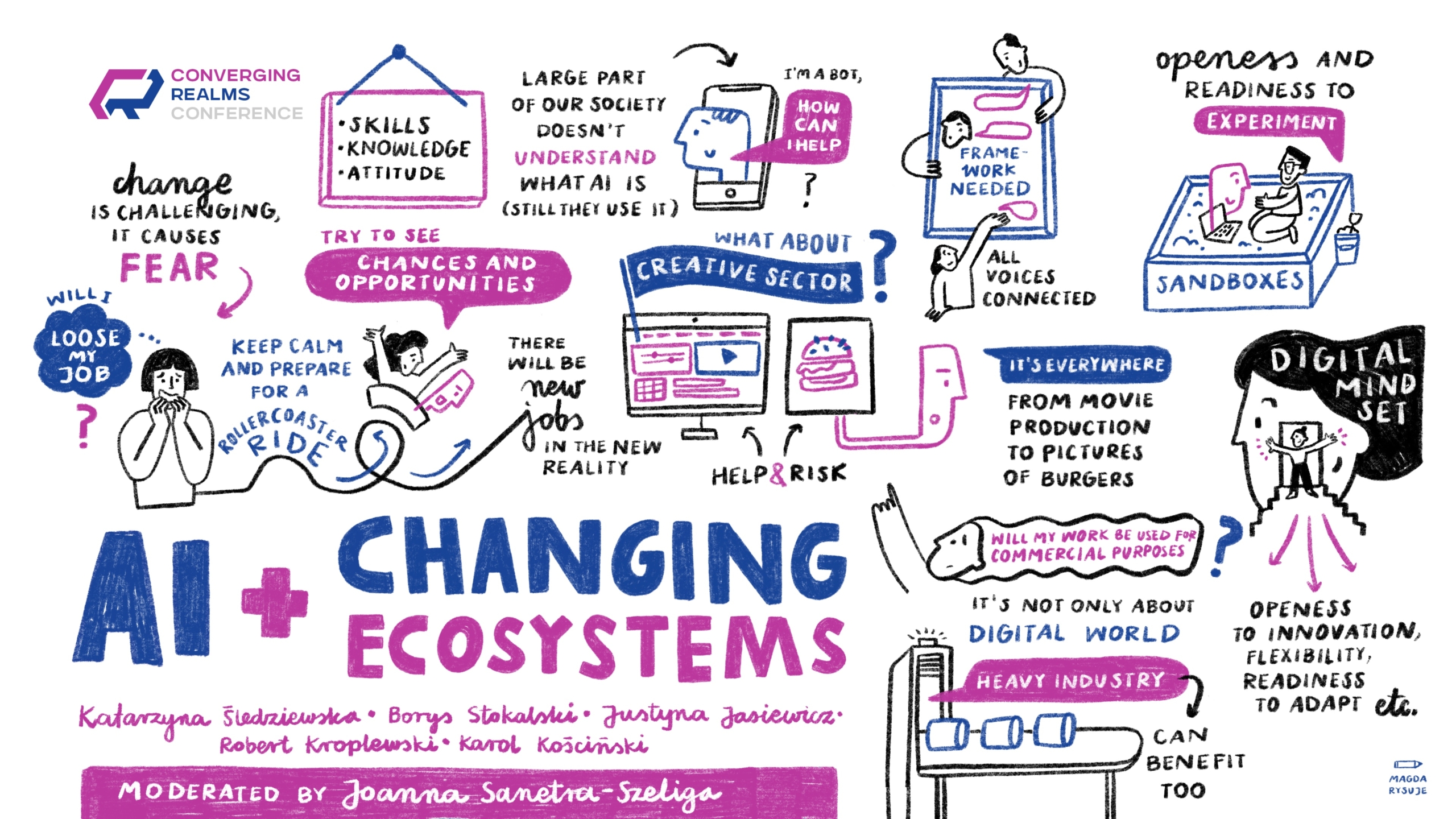

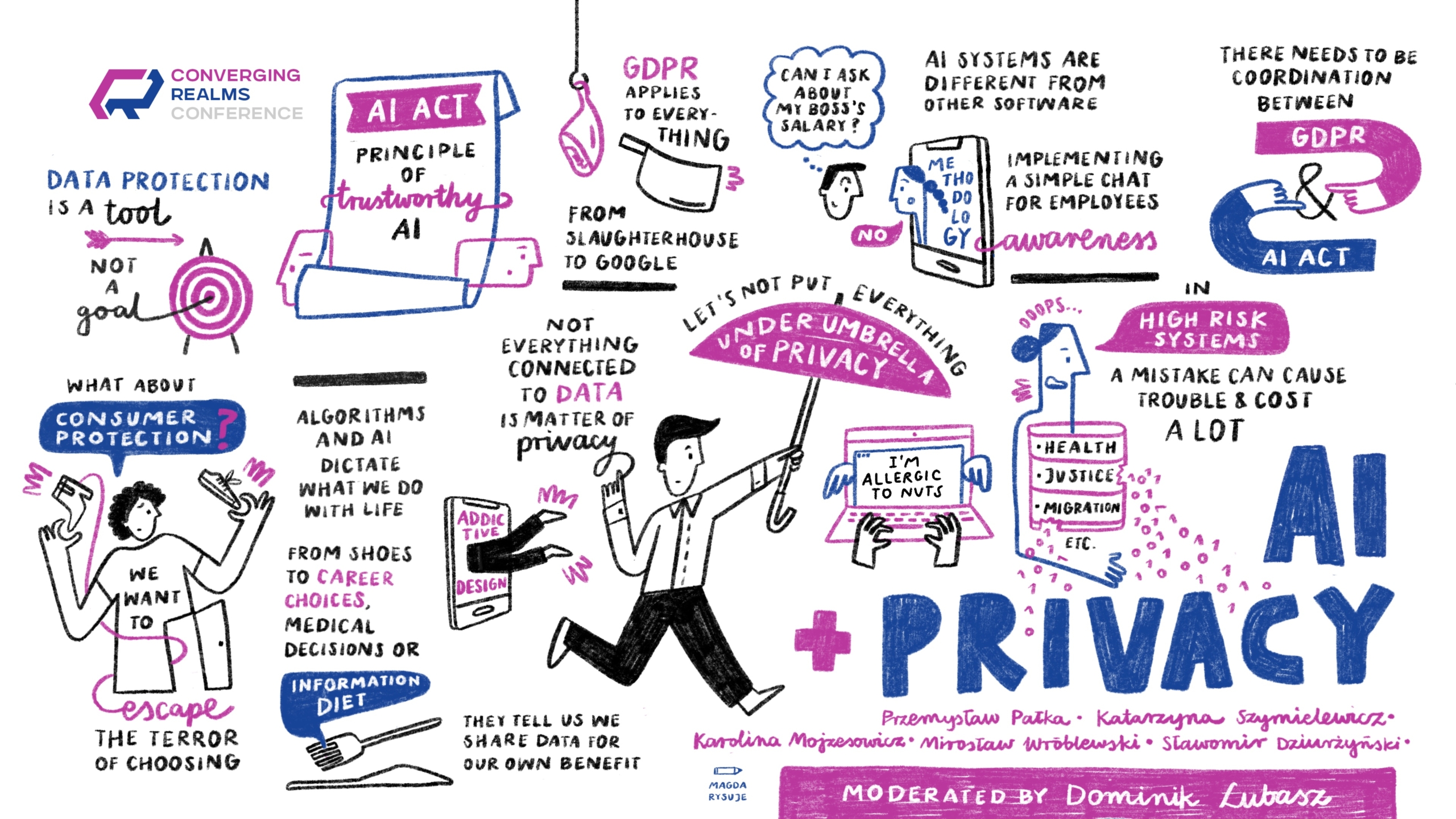

For more insights from each of the panels see the visual notes created by Magda Rysuje (below)!

Converging Realms: Law, Technology, and Society in the Age of Ethical and Multi-Agent AI

Kraków, September 26–27, 2024

The conference was co-organised by the Future Law Lab (FLL) at the Jagiellonian University and the Centrum Cyfrowe Foundation (FCC), with Lubasz i Wspólnicy, Kancelaria Radców Prawnych acting as a Strategic Partner. The conference was open and free of charge, thanks to funding from the Strategic Programme Excellence Initiative at Jagiellonian University and thanks to the support offered by the conference partners.

Honorary patronage: the Rector of the Jagiellonian University and the President of the Personal Data Protection Office

Partners: UNESCO Polish National Commission, Society of Authors ZAiKS, Allegro, Google, Knowledge Rights 21

Patrons: Panoptykon Foundation, Polish Chamber of Information Technology and Telecommunications (PIIP), Polish Agency for Enterprise Development (PARP), Chamber of Digital Economy, Digital Poland Association, IAB Poland, Wolters Kluwer, NASK – a National Research Institute

Media patrons: Rzeczpospolita, Dziennik Gazeta Prawna

Visual notes

KEYNOTE DAY 1

Henry Prakken, Artificial Intelligence and Data Science division, Utrecht University

PANEL 1: AI + ETHICS

Wayne Holmes, International Research Centre On Artificial Intelligence

Alek Tarkowski, Open Future

Wojciech Ciszewski, Jagiellonian University

Zofia Dzik, Centrum Etyki Technologii Instytutu Humanites

Moderated by: Maria Drabczyk, Centrum Cyfrowe

PANEL 2: AI + CULTURE

Mia Ridge, British Library

Marta Materska-Samek, Jagiellonian University

Marco Fiore, Michael Culture Association

Marcin Olender, Google

Tomasz Włodarski, Małopolski Instytut Kultury

Moderated by: Ewa Laskowska-Litak, Jagiellonian University

PANEL 3: AI + EDUCATION

Anna Bałdyga, Komisja Edukacji Rady Miasta Krakowa

Daniel Rząsa, Google News Initiative

Ida Schrøder, Aarhus University

Moderated by: Dawid Juszka, AGH University of Krakow

KEYNOTE DAY 2 + ROUNDTABLE: AI + CHANGING ECOSYSTEM

Keynote: Katarzyna Śledziewska, Digital Economy Lab, University of Warsaw

ROUND TABLE DISCUSSION:

Katarzyna Śledziewska, Digital Economy Lab, University of Warsaw

Karol Kościński, Society of Authors ZAiKS

Robert Kroplewski, Global Partnership on Artificial Intelligence

Justyna Jasiewicz, PwC

Borys Stokalski, Polska Izba Informatyki i Telekomunikacji

Moderated by: Joanna Sanetra-Szeliga, Krakow University of Economics

PANEL 4: AI + RESEARCH

Maciej Maryl, Digital Humanities Centre, Polish Academy of Sciences

Maja Bogataj Jančič, Intellectual Property Institute, Knowledge Rights 21

Pamela Krzypkowska, Ministry of Digital Affairs

Maciej Piasecki, Wrocław University of Science and Technology

Konrad Gliściński, Jagiellonian University

Moderated by: Kuba Piwowar, Centrum Cyfrowe, SWPS

PANEL 5: AI + JUSTICE

Iga Bałos, ENOIK

Olivier Halupczok, Boldare

Marco Giacalone, Vrije Universiteit Brussel

Karolina Wilamowska, Women in Law

Moderated by: Tomasz Sroka, Jagiellonian University

PANEL 6: AI + PRIVACY

Karolina Mojzesowicz, European Commission

Przemysław Pałka, Jagiellonian University

Katarzyna Szymielewicz, Panoptykon

Mirosław Wróblewski, Personal Data Protection Office

Sławomir Dziurzyński, Allegro

Moderated by: Dominik Lubasz, Lubasz i Wspólnicy, Kancelaria Radców Prawnych

Visual notes drawn by Magda Rysuje.